Algorithm Governance Roundup #5

Community spotlight: Stiftung Neue Verantwortung | Rotterdam risk-scoring of welfare claimants

Welcome to March’s Roundup. We hope you enjoy this month’s community spotlight on Stiftung Neue Verantwortung about their work on auditing recommender systems for systemic risk using their risk-scenario-based audit method.

As a reminder, we take submissions: we are a small team who select content from public sources. If you would like to share content, please reply or send a new email to algorithm-newsletter@awo.agency. Our only criterion for submission is that the update relates to algorithm governance, with emphasis on the second word: governance. We would love to hear from you!

Many thanks and happy reading!

AWO team

In Belgium, the AI4Belgium Coalition hosted a panel event, ‘Towards an ethical charter for the use of AI in public administration’. AI4Belgium is a grassroots community bringing together stakeholders in the public sector, private sector, academia and civil society. They have published a report with 5 strategic recommendations for a Belgian AI strategy.

In Europe, Politico discussed how the release of ChatGPT and other Large Language Models has influenced the EU’s AI Act. Lead lawmakers in the European Parliament have proposed to categorise AI systems generating complex text without human oversight as ‘high-risk’. They are also working to impose stricter requirements on the developers and users of such systems.

In Germany, Stiftung Neue Verantwortung are working to analyse and evaluate the recommender systems of internet intermediaries. They have developed a risk-scenario-based audit method to operationalise audits for systemic risk, as per the Digital Services Act. They are planning to use this audit process to investigate TikTok's recommender system. In this month’s Community Spotlight, we interview the SNV team about this work.

In the Netherlands, a machine-learning system deployed in Rotterdam to risk-score welfare claimants has been assessed by journalists. The investigation found that being a parent, a woman, young, not fluent in Dutch, or struggling to find work all increased someone’s risk score. Lighthouse Reports and Wired obtained access to the machine learning model, training data, and user operation manuals, enabling them to reconstruct the system. This is the first in Lighthouse Reports’ Suspicion Machines series, with investigations in Spain, Sweden, Ireland, France, Denmark, Italy and Germany to come.

In the UK, the ICO has published further guidance on AI and data protection. The document discusses transparency, accountability and governance, and accuracy. It also has new chapters on fairness in AI, including guidance on solely automated decision-making and technical approaches to mitigate algorithmic bias, and fairness in the AI lifecycle.

In the US, the Mozilla Foundation has announced the 2023 Mozilla Technology Fund Cohort. The 8 open-source projects focus on auditing AI systems and will contribute knowledge to Mozilla’s Open Source Audit Tooling (OAT) Initiative. Projects include AI Risk Checklists by Responsible AI Collaborative, Gigbox by The Worker’s Algorithm Observatory, and CrossOver by CheckFirst.

OpenAI has released GPT-4 and published a technical report and system card. The system card provides a high-level overview of the safety processes they adopted prior to deployment; Ars Technica discusses this document. Researchers can apply for access to OpenAI’s API. OpenAI has also launched Evals, a framework for evaluating OpenAI models and an open-source registry of benchmarks. Unlike with previous releases, OpenAI has not disclosed the content of GPT-4’s training set. The Verge, Vice and Nature have published articles discussing open-source AI research and the consequences of this decision.

Amnesty Tech is hiring a Strategic Communications and External Affairs Advisor. The advisor will develop and implement cutting-edge, innovative media and communications strategies related to technology and human rights. The role is based in London. Application deadline is 23 March.

The Ada Lovelace Institute is hiring a Senior Researcher to join their emerging technology and industry practice directorate. The researcher will lead a series of projects exploring the effectiveness of responsible AI and ethics and accountability practices. The role is based in London. Application deadline is 27 March.

The Engine Room is hiring a Senior Research Associate. The researcher will conduct research on risks to civil society posed by technology and data, the impacts of emerging technologies, and the ways in which movements can push for justice. The role is remote. Application deadline is 26 March.

AWO is hiring a Senior Associate for our Strategic Research and Insight team. This is a generalist role but may include work on algorithm governance. The role is remote within the UK, Belgium, France, Greece, Ireland, Italy or the Netherlands. Please read or forward to anyone whom you think will be interested! Application deadline is extended to 02 April.

EDRi is electing two Board members. Board members will help shape the future of the organisation and the network, and advance EDRi’s mission to promote and protect human rights in the digital environment. Application deadline is 03 April.

MozFest 2023

Virtual: 20 - 24 March

This festival has hundreds of workshops, including those on AI governance and ethics, which combine art, technology and community. The panels on AI governance include: Some workshops will be recorded. Tickets are available with a suggested minimum donation of $45.

Launch of the European Centre for Algorithmic Transparency

Hybrid online and in-person: Seville, Spain, 18 April 11:00 - 13:15 CET

This event presents the European Centre for Algorithmic Transparency (ECAT). Speakers will discuss the challenges of building a safe online space and ECAT’s role supporting the Commission’s new regulatory tasks under the DSA and researching algorithmic transparency. Read our Community Spotlight on ECAT here.

Community Spotlight: Stiftung Neue Verantwortung

Stiftung Neue Verantwortung (SNV) is a non-profit think tank working on current political and societal challenges posed by new technologies. We spoke to the team who worked on ‘Auditing Recommender Systems: Putting the DSA into practice with a risk-scenario-based approach’, which provides a concrete method to operationalise the Digital Services Act’s audit provisions. This team includes the project director Dr Anna-Katharina Meßmer, technical expert Dr Martin Degeling and student assistants Alexander Hohlfeld and Santiago Sordo Ruz.

Q: Could you tell us about the impetus for SNV’s work on auditing recommender systems?

SNV: Anna-Katharina and Alexander previously worked on a study on digital information and news literacy in Germany. We found that people had severe problems distinguishing between, for example, information and disinformation, or information and advertisements online. As we did press interviews for the project, we realised most people tend to focus on education and educational challenges. However, we thought that politics and platforms played an important role, particularly as huge problems stemmed from the platforms’ design and recommender systems. At this time, the research on recommender systems was still unclear on what the actual problems and challenges were. This led us to start thinking about auditing platforms. It’s hard to imagine now, but back then very few people thought this was a relevant or interesting topic to work on! Nevertheless, we wrote a pitch and received funding for our work. The project started in early 2022, during the trilogues of the DSA. The passing of the DSA has generated a lot of interest in audits. This worked in our favour because we were able to come out with one of the first papers on risk assessments and audits for the DSA.

Q: And how does this work relate to the DSA?

SNV: The DSA requires several types of audits and risk assessments. These fit into three categories:

- First-party audits: Platforms must complete risk assessments for systemic risks, especially before they launch new products.

- Second-party audits: Platforms must contract with independent auditors to conduct compliance audits. These are focused on compliance with the DSA rather than audits in the technical sense.

- Third-party audits: Researchers and civil society organisations can conduct external risk assessments for systemic risks. Also, the European Commission and Digital Services Coordinators will have access to data to monitor compliance.

We see two main challenges with the DSA. Firstly, the conceptualisation and definition of audits and risk assessments are quite opaque and unclear. These abstract definitions mean that other researchers and people working in policy have different understandings of what an audit or a risk assessment is. Our paper clarifies how to bring DSA definitions together with definitions emerging from technical research. Secondly, the DSA is concerned with several systemic risks, but these are abstract and opaque as well. Therefore, we decided to think about how these abstract systemic risks can be broken down into testable hypotheses. This is why our auditing method for recommender systems takes a risk-scenario-based approach.

Q: Could you tell us about this method in more detail?

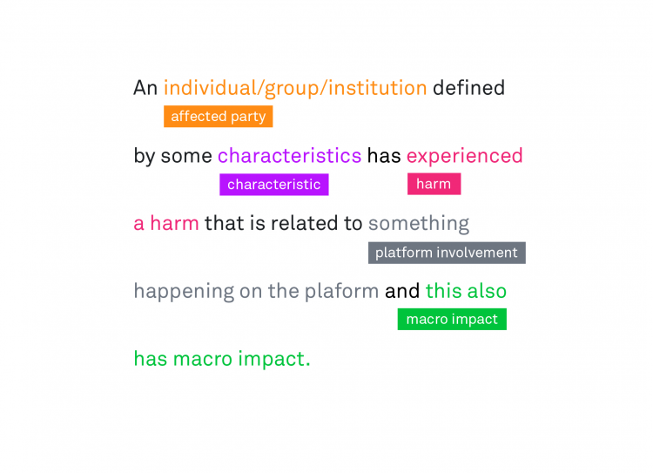

SNV: Our audit method is a four-step, risk-scenario-based audit process:

First phase – planning. First, it is important to conceptualise the platform’s main strategy to optimise its recommender system(s) because this has a huge impact on the rest of the considerations. Other essential questions include: What type of media is the platform based on? What are the different technical products on the platform? Who is the audience? Then it is important to define the stakeholders. We believe it is necessary to take a multi-stakeholder approach, including legislators, platforms, regulators, and especially users and civil society organisations.

Second phase – define and prioritise risk scenarios. This is the core of our approach. At this point we break down a systemic risk into different layers using the following template:

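[Template layers, as labelled in the example that follows: affected party, characteristic, harm, platform involvement, macro impact]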
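For readers who prefer to see the layers as a data structure, here is a minimal sketch in Python. It is not SNV's tooling: the `RiskScenario` class, the `priority` score and the `prioritise` helper are assumptions made for illustration, and the second scenario is an invented placeholder. Only the layer names and the first scenario come from the interview.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskScenario:
    """One risk scenario, using the layer names from the interview example."""
    systemic_risk: str
    affected_party: str
    characteristic: str
    harm: str
    platform_involvement: str
    macro_impact: str
    # Hypothetical score from 1 (low) to 5 (high); not part of SNV's template.
    priority: int

    def hypothesis(self) -> str:
        """Combine the layers into one testable statement."""
        sentence = (
            f"{self.affected_party} who {self.characteristic} experience "
            f"{self.harm} due to {self.platform_involvement}."
        )
        return sentence[0].upper() + sentence[1:]


def prioritise(scenarios: list[RiskScenario], top_n: int = 1) -> list[RiskScenario]:
    """Return the top_n scenarios, highest priority score first."""
    return sorted(scenarios, key=lambda s: s.priority, reverse=True)[:top_n]


scenarios = [
    # Scenario described in the interview; the priority value is assumed.
    RiskScenario(
        systemic_risk="mental well-being",
        affected_party="young adults",
        characteristic="have a personal crisis",
        harm="over-exposure to videos describing self-harm",
        platform_involvement="a TikTok algorithm",
        macro_impact="general mental health crisis in young adults",
        priority=5,
    ),
    # Invented placeholder scenario, included only to show prioritisation.
    RiskScenario(
        systemic_risk="mental well-being",
        affected_party="placeholder group",
        characteristic="share a placeholder characteristic",
        harm="a placeholder harm",
        platform_involvement="a placeholder platform feature",
        macro_impact="placeholder macro impact",
        priority=2,
    ),
]

selected = prioritise(scenarios)
print(selected[0].hypothesis())
```

Writing a scenario down this way forces every layer to be filled in before any measurement is designed, which is one way to read the idea of breaking abstract systemic risks into testable hypotheses.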

We can illustrate this with the risk to mental well-being. This could manifest in hundreds of different scenarios. For example, the scenario could be the mental health of young adults (affected party) who have a personal crisis (characteristic) and are over-exposed to videos describing self-harm (harm) due to a TikTok algorithm (platform involvement). This contributes to the general mental health crisis in young adults (macro impact). Given that there are so many potential scenarios for the same risk, it is essential to prioritise which ones will be audited.

Third phase – developing measurements. This is the stage where we conduct the technical audits. It is necessary to tailor the audit approach to the specific platform elements involved in the process. If we were investigating the mental health of young adults on TikTok, we could conduct an API audit because TikTok has just announced API access for researchers. We may also want to do a sock-puppet or scraping audit instead and combine this with a survey to take the users’ perspective into account. When approaching a specific scenario, it is important to consider that each of these technical audit methods and measurements has certain benefits and limitations. Therefore, it is necessary to consider which are most useful in the scenario and combine them.

Fourth phase – evaluation. Here we evaluate our measurements from the prior phase and report them in an audit report. We provide a template of this report in our research.

Q: How did you develop this research?

SNV: We began with a literature review and started to reach out for conversations with experts quite early on. This included technical experts we found through GitHub. It was challenging because there are a limited number of experts on audits and risk assessments in Germany. This meant we had to reach out to people all over Europe, the UK, and the US, which has a particularly strong research scene. At SNV we work in collaboration with other institutions and organisations. For this project, we worked particularly closely with TrackingExposed, who provided technical expertise. We went on to write a short input paper and invited organisations to a workshop to test our hypothesis and approach. We got a lot of useful and interesting feedback from organisations including AlgorithmWatch, Ada Lovelace Institute, Mozilla, Reset, ISD and Eticas. Based on this feedback and comments, we were able to write the final paper. This area of work involves the combination of many different disciplines and perspectives. At SNV, we aim to make our work as interdisciplinary as possible. Anna-Katharina and Alex have a background in sociology and communication science whilst Martin and Santiago have technical expertise with computer science backgrounds. Combining these different perspectives in policy, sociology, communication science, and computer science was an important challenge.

Q: What are your thoughts on the development of standards for algorithmic audits?

SNV: We are concerned by the lack of standardisation in the DSA. In this regard, we are looking forward to the delegated and implementing acts on data access and transparency, and independent audits. We are interested in whether these will include any templates or standards and what these will look like. One of the challenges of standardisation and audits is that they sometimes result in simple checklists of existing documentation, while missing the actual practice. In our opinion there are two main goals for standards. First, reproducibility. A lot of systemic risks mentioned in the DSA are the subject of existing research but, because of the wide array of methods and measurements, this research is not always comparable. Second, observability. Generally, transparency gives insights into a single point in time. We are interested in observing how platforms and their usage evolve and change every second, which means we need access to process data.

Q: What work does SNV have coming up?

SNV: The next step for our team is to use our risk-scenario-based audit approach to conduct a third-party audit ourselves. We are currently deciding what scenario to focus on and are planning to examine TikTok’s recommender system using sock puppets as a start. Our brilliant colleague Dr Julian Jaursch is working on the project ‘Platform regulation in Germany and the EU’, which looks at the DSA. His work is especially focused on the Digital Services Coordinators and how the DSA will be translated into German law. This is going to be challenging because there is pre-existing German legislation.

Thank you for reading. If you found it useful, forward this on to a colleague or friend. If this was forwarded to you, please subscribe!

If you have an event, interesting article, or even a call for collaboration that you want included in next month’s issue, please reply or email us at algorithm-newsletter@awo.agency. We would love to hear from you!

You are receiving Algorithm Governance Roundup because you have signed up for AWO’s newsletter mailing list. Your email and personal information are processed based on your consent and in accordance with AWO’s Privacy Policy. You can withdraw your consent at any time by clicking here to unsubscribe. If you wish to unsubscribe from all AWO newsletters, please email privacy@awo.agency.

A W O

Wessex House

Teign Road

Newton Abbot

TQ12 4AA

United Kingdom
